Philosophy of Science¶

- Early 20th Century: Karl Popper (paraphrased): "A scientific statement is any statement that can be falsified."

- Later, people figured out this was not the right way to think about it. And so...

- Late 20th Century: "A scientific statement is any statement to which we can assign a probability."

(Empirical) science can never know for certain that something is true. Scientific knowledge is about there being a high probability that something is true.

Philosophy of Science¶

Some years ago I had a conversation with a layman about flying saucers. Because I am scientific, I know all about flying saucers! I said, "I don't think there are flying saucers." So my antagonist said, "Is it impossible that there are flying saucers? Can you prove that it's impossible?"

"No," I said, "I can't prove it's impossible. It's just very unlikely." At that he said, "You are very unscientific. If you can't prove it's impossible, then how can you say that it's unlikely?" But that is the way that is scientific. It is scientific only to say what is more likely and what is less likely, and not to be proving all the time the possible and impossible.

To define what I mean, I might have said to him, "Listen, I mean that from my knowledge of the world that I see around me, I think that it is much more likely that the reports of flying saucers are the results of the known irrational characteristics of terrestrial intelligence than of the unknown rational efforts of extra-terrestrial intelligence."

-- Richard Feynman

Arguing with Data¶

- Publication bias

- Discussion style and etiquette

- Meta analysis

- Economic Theory, why bother?

Do students learn more with instructors who receive higher evaluations?¶

Note: we are not asking about the causal relationship between evaluations and learning.

Motivation: we want to know if we can use evaluations to predict who teaches well.

- Many universities rely on student evaluations for staffing decisions. Students use them to choose courses.

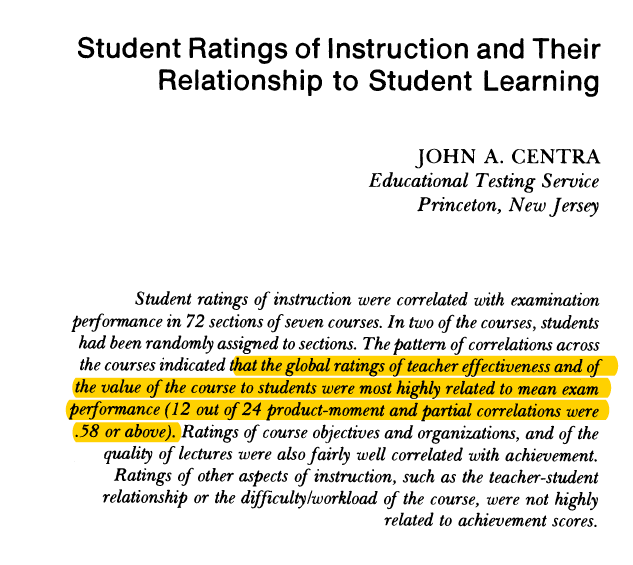

Results of a Study (Centra, 1977)¶

- In two courses, students had been randomly assigned to sections.

“…the global ratings of teacher effectiveness ….were most highly related to mean exam performance.”

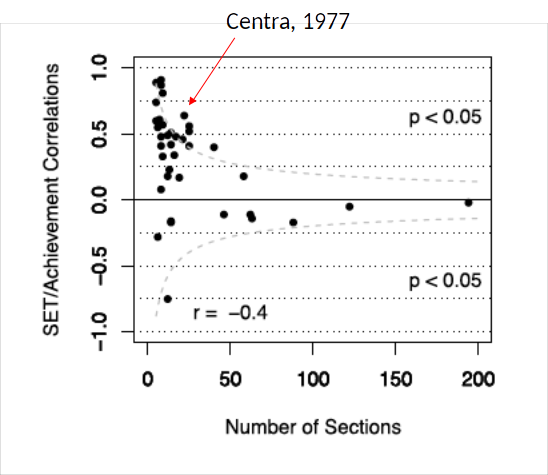

Figure from a Meta-Analysis:¶

What is going on here?

A meta-analysis is a statistical analysis that combines the results of multiple scientific studies.
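
One common way to combine studies is inverse-variance weighting (a fixed-effect meta-analysis), in which more precise studies receive more weight. The sketch below is only an illustration: the effect sizes and standard errors are made up, the function name `pooled_effect` is hypothetical, and this is not necessarily the method behind the figure on this slide.

```python
import math

def pooled_effect(effects, std_errors):
    """Fixed-effect (inverse-variance weighted) pooled estimate and its standard error."""
    weights = [1.0 / se**2 for se in std_errors]
    total = sum(weights)
    estimate = sum(w * e for w, e in zip(weights, effects)) / total
    return estimate, math.sqrt(1.0 / total)

# Hypothetical studies (made-up numbers): a small, noisy study with a large
# effect and two larger, more precise studies with small effects.
effects = [0.40, 0.10, 0.05]
std_errors = [0.20, 0.10, 0.05]

estimate, se = pooled_effect(effects, std_errors)
print(f"pooled effect = {estimate:.3f}, standard error = {se:.3f}")
```

The pooled estimate sits much closer to the precise studies than to the noisy one, which is the sense in which a meta-analysis "averages" the literature.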

Insight #1¶

- Different studies get different results.

- Take results from any one single study with a grain of salt.

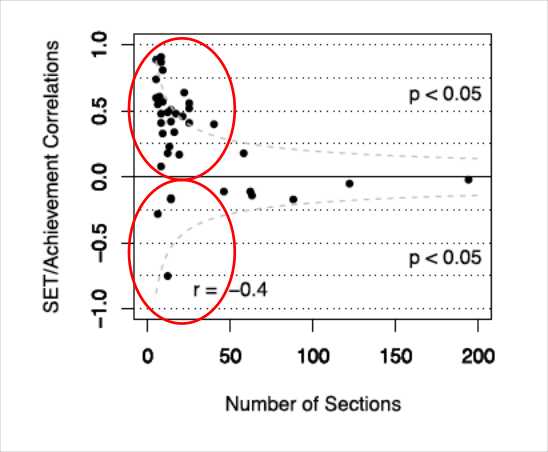

Figure from a Meta-Analysis (back again):¶

More observations $\Rightarrow$ smaller effects.

For small samples, many more positive results than negative results.

- Both of these are warning signs!

Two Quality Criteria for Estimators¶

Unbiasedness: estimator is correct on average.

Consistency: estimator converges to the true value as the sample size increases to infinity.
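
A small simulation can make the two criteria concrete. This is a minimal sketch under assumed values: the "true effect" of 0.3, the normal outcomes with standard deviation 1, and the number of replications are all chosen for illustration. The estimator is the sample mean, which satisfies both criteria.

```python
import random
import statistics

random.seed(0)
TRUE_MEAN = 0.3       # assumed "true effect" for this illustration
REPLICATIONS = 1000   # number of simulated studies per sample size

def simulate_estimates(sample_size):
    """Sample means from REPLICATIONS simulated studies of a given size."""
    return [
        statistics.fmean(random.gauss(TRUE_MEAN, 1.0) for _ in range(sample_size))
        for _ in range(REPLICATIONS)
    ]

results = {}
for n in (10, 100, 1000):
    estimates = simulate_estimates(n)
    # Average across studies speaks to unbiasedness; spread speaks to consistency.
    results[n] = (statistics.fmean(estimates), statistics.stdev(estimates))
    print(f"n={n:5d}  average estimate={results[n][0]:.3f}  spread={results[n][1]:.3f}")
```

At every sample size the average estimate is close to the true value (unbiasedness), while the spread of estimates shrinks as the sample grows (consistency).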

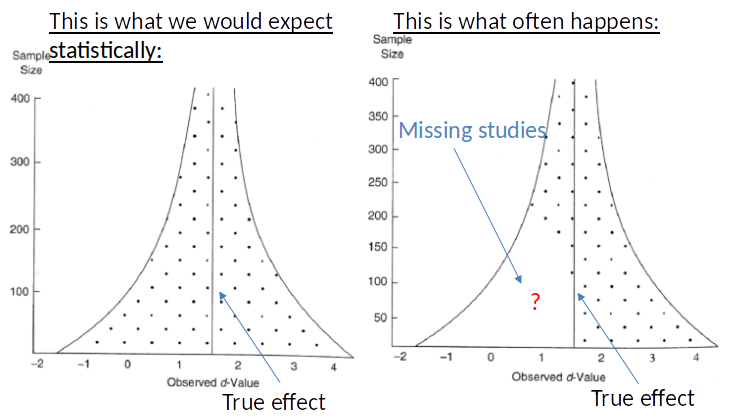

Funnel Plots: A Cautionary Tale of Misinterpretation¶

- What we would 'expect' a funnel plot to look like, based on our two quality criteria.

- Notice the value on the x-axis (the funnel is centred on the true effect, not on zero).

Cautionary Tale: The above sounds sensible, but it is only true if the experimenter chooses the sample size at random, that is, without first thinking about how large the sample needs to be to have enough power to obtain statistically significant results. See http://datacolada.org/58

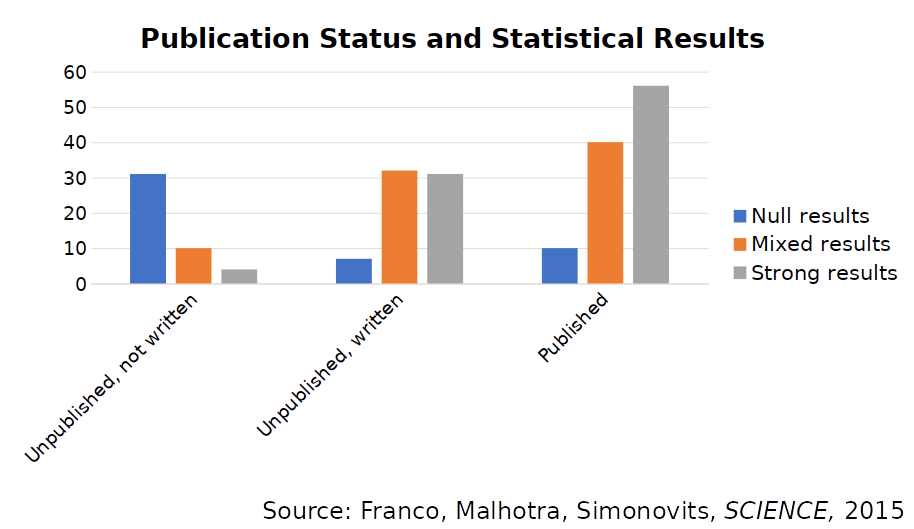

File drawer effect¶

- File drawer effect: bias introduced into the scientific literature by selective publication.

Some results are more likely to get published than others.

Guess which ones?!
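
To see why selective publication matters, here is a minimal simulation sketch. It assumes, purely for illustration, a true effect of 0.1, outcomes with standard deviation 1, and a stylized rule under which only significantly positive estimates get published. None of these numbers come from the studies discussed in these slides.

```python
import random
import statistics

random.seed(1)
TRUE_EFFECT = 0.1        # assumed true effect (outcome standard deviation is 1)
STUDIES_PER_SIZE = 5000  # simulated studies for each sample size

def published_estimates(sample_size):
    """Simulate studies and keep only those that are significantly positive."""
    std_error = 1.0 / sample_size ** 0.5
    kept = []
    for _ in range(STUDIES_PER_SIZE):
        # Shortcut: the mean of n normal observations is itself normal,
        # so we draw the study's estimate directly.
        estimate = random.gauss(TRUE_EFFECT, std_error)
        if estimate / std_error > 1.96:  # assumed (stylized) publication rule
            kept.append(estimate)
    return kept

published_mean = {}
for n in (20, 200, 2000):
    kept = published_estimates(n)
    published_mean[n] = statistics.fmean(kept)
    share = len(kept) / STUDIES_PER_SIZE
    print(f"n={n:5d}  published share={share:.2f}  "
          f"mean published effect={published_mean[n]:.3f}")
```

Under these assumptions, the published estimates from small studies greatly overstate the true effect, while large studies are published almost regardless of their result and land close to it. That pattern produces both warning signs: smaller effects with more observations, and a surplus of positive results among small samples.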

Analysis of 221 started experiments¶

- Note: these were in Psychology, not Economics, but the same 'file drawer effect' problem applies.

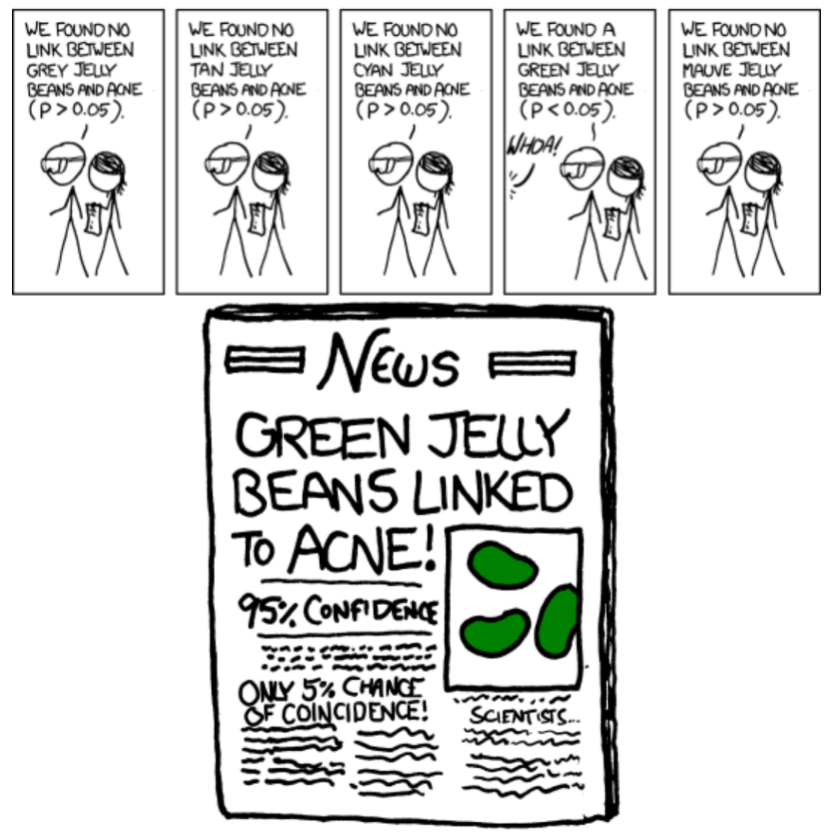

The Red M&M¶

- Relationship between jelly beans and acne? No significant result (p>0.05)

- Relationship between...

- Red jelly beans and acne? Not significant (p>0.05)

- Blue jelly beans and acne? Not significant (p>0.05)

- Yellow jelly beans and acne? Not significant (p>0.05)

- Pink jelly beans and acne? Not significant (p>0.05)

- Orange jelly beans and acne? Not significant (p>0.05)

- Peach jelly beans and acne? Not significant (p>0.05)

...

Multiple Hypothesis Testing¶

If you test multiple hypotheses, some of them will be statistically significant even if there is no true relationship.

Why? Chance!

- At a 5% significance level (95% confidence), each test has a 5%, or 1-in-20, chance of a Type I error when there is no true relationship.

There are statistical ways to adjust your p-values for multiple hypothesis testing.
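
The arithmetic can be sketched in a few lines. The example assumes the tests are independent and that no true relationship exists in any of them. It uses the Bonferroni correction as one simple example of an adjustment; other adjustments exist, and the function names are hypothetical.

```python
ALPHA = 0.05

def prob_any_false_positive(num_tests, alpha=ALPHA):
    """Chance of at least one Type I error across independent tests of true nulls."""
    return 1.0 - (1.0 - alpha) ** num_tests

def bonferroni_threshold(num_tests, alpha=ALPHA):
    """Per-test significance threshold under the Bonferroni correction."""
    return alpha / num_tests

for m in (1, 5, 20):
    print(f"{m:2d} tests: P(at least one false positive) = "
          f"{prob_any_false_positive(m):.2f}, "
          f"Bonferroni threshold = {bonferroni_threshold(m):.4f}")
```

With 20 independent tests, the chance of at least one spurious "significant" result is about 64%. Bonferroni keeps the overall chance at or below 5% by requiring p < 0.0025 for each individual test.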

So how much can we trust published findings?¶

- The Open Science Collaboration tried to replicate 100 articles published in three high-ranking Psychology journals.

- They succeeded in 39 cases!

[Source: Open Science Collaboration. (2015, Science). Estimating the reproducibility of psychological science.]

- Note: Psychology is notorious as the worst performer, mainly due to low sample sizes, but the problem is also real in Economics.

Publication Bias¶

“Publication bias occurs when the publication of research results depends not just on the quality of the research but also on the hypothesis tested, and the significance and direction of effects detected.”¶

- Source: Dickersin (1990, JAMA). The existence of publication bias and risk factors for its occurrence.

Reasons to be careful when interpreting the results of one study:¶

- Bad research designs (e.g. omitted variable bias)

- Chance

- Publication bias

Reasons to be careful when interpreting the results of one study (less PC ones):¶

- Author's Incentives: Economists are very good at recognising that everyone else is responding to incentives. Less so at recognising that we do too, including in research.

- Most academic economists try very hard to avoid being influenced by such things in their research, but it will creep in unconsciously, even if just in terms of what questions we ask.

Partial Solutions¶

- Preregister experiments

- Determine before the experiment how many observations you need (power analysis)

- Encourage replications

- Conduct meta-analyses

(These are focused on fields where experiments are appropriate.)
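
As an illustration of the power-analysis step, the sketch below approximates the required sample size for comparing the means of two equally sized groups with a two-sided test. It relies on the normal approximation, and the defaults (5% significance, 80% power) are common conventions assumed here rather than values taken from these slides. The function name is hypothetical.

```python
import math
from statistics import NormalDist

def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    effect_size is the difference in means divided by the standard deviation.
    Uses the normal approximation, so it slightly understates what a t-test needs.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)

for d in (0.2, 0.5, 0.8):
    print(f"effect size {d}: about {sample_size_per_group(d)} observations per group")
```

Halving the effect size you want to detect roughly quadruples the sample you need, which is why small studies chasing small effects are so often underpowered.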

Related book: https://www.ucpress.edu/book/9780520296954/transparent-and-reproducible-social-science-research

Jan's Personal Take¶

Science has its flaws but is still the best game in town when it comes to finding the truth.

There are mechanisms (peer review, meta-analysis, replications) that help us get closer to the truth.

I am usually careful when interpreting the results of a single study.

I am more convinced if a discipline has achieved consensus about a specific result.

For single studies outside my discipline (e.g. that I read about in the news), I am less able to evaluate their quality and probably more sceptical than the average person.

Robert's Personal Take¶

Science has its flaws but is still the best game in town when it comes to finding the truth. (Same)

I am usually careful when interpreting the results of a single study. (Same)

I am more convinced if a discipline has achieved consensus about a specific result. (Same. Replication is an important aspect of this.)

I put a large emphasis on how the result was arrived at. (Is it just correlation? Is the sample large?) As a result, I am much more sceptical of the use of meta-analysis.

Theory remains important to understand how to interpret the evidence and whether the results of a given study apply to the problem at hand. (If a study finds that deworming works in Kenya, will it work in Bangladesh?)

Discussion Style and Etiquette (1/3)¶

In economics (and many other sciences), we typically focus on one very narrow question at a time.

For example, we are asking:

- What is the relationship between teaching effectiveness and teaching evaluations?

We are not:

- Asking if teaching evaluations are related to other aspects of teaching (e.g. how comfortable students feel).

- Saying that teaching evaluations are useless.

Discussion Style and Etiquette (2/3)¶

In disputes, we aim to represent the opposing views as accurately and positively as possible. This is called steel manning.

(Rob: the use of models/theory is partly about making sure we cannot disagree on the 'views'.)

We try not to build and attack a straw man: a misrepresentation of someone's position or argument that is easy to defeat.

Discussion Style and Etiquette (3/3)¶

We try to stay focused on the discussion at hand and avoid attacks based on irrelevant factors such as,

- The person’s character

- Motive

- Persons associated with the argument

Such attacks are called ad hominem (Latin for “to the man”).

As a general rule: we try to assume that the person we’re arguing with is intelligent and has good intentions.

Final thoughts (1/2)¶

Discussing emotional topics can be difficult. When doing this, I try to be humble and calm.

It is not a sign of defeat to change my mind.

- "When the facts change, I change my mind. What do you do?" (Keynes did not say this, but it is often attributed to him.)

Final Thoughts (2/2)¶

Sometimes both points might be true at the same time or in different situations.

- Example: minimum wage may not affect employment in some situations but reduce employment in others.

I hold many beliefs.

- I have changed beliefs on some positions.

- Often smart people hold opposing beliefs.

- I am most likely wrong about some of my beliefs!